Designing an AI-Native Design Tool

Early reflections from building Bloom's MVP

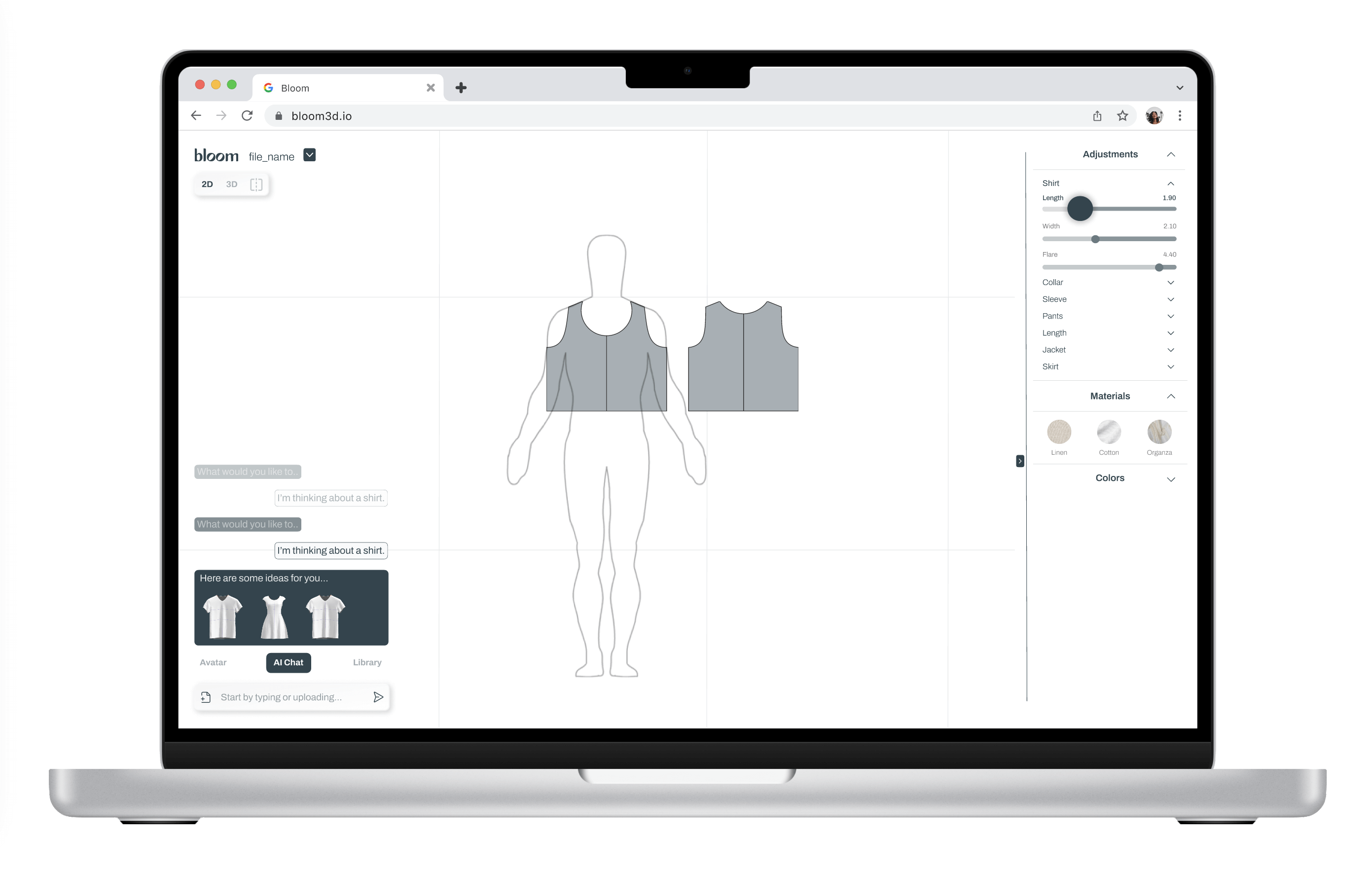

Bloom is a browser-based, AI-native design tool that enables fashion designers and pattern makers to generate garment patterns from text or image prompts while retaining full editing control.

Over the past two months, I partnered with another designer on a founding team of six to build the MVP, navigating challenges unique to AI-first tools, rapid iteration cycles, and constrained resources.

Compared with traditional UX projects, designing an AI-native tool raises unexpected design questions:

- How do we balance conversational interfaces with the clarity of graphical UIs?

- How do we design around AI, building flows that compensate for its current limitations?

- What happens when the product leads with a solution, not a problem?

This case study is a reflection on designing through ambiguity, prototyping at speed, and shaping tools for workflows that are still emerging.

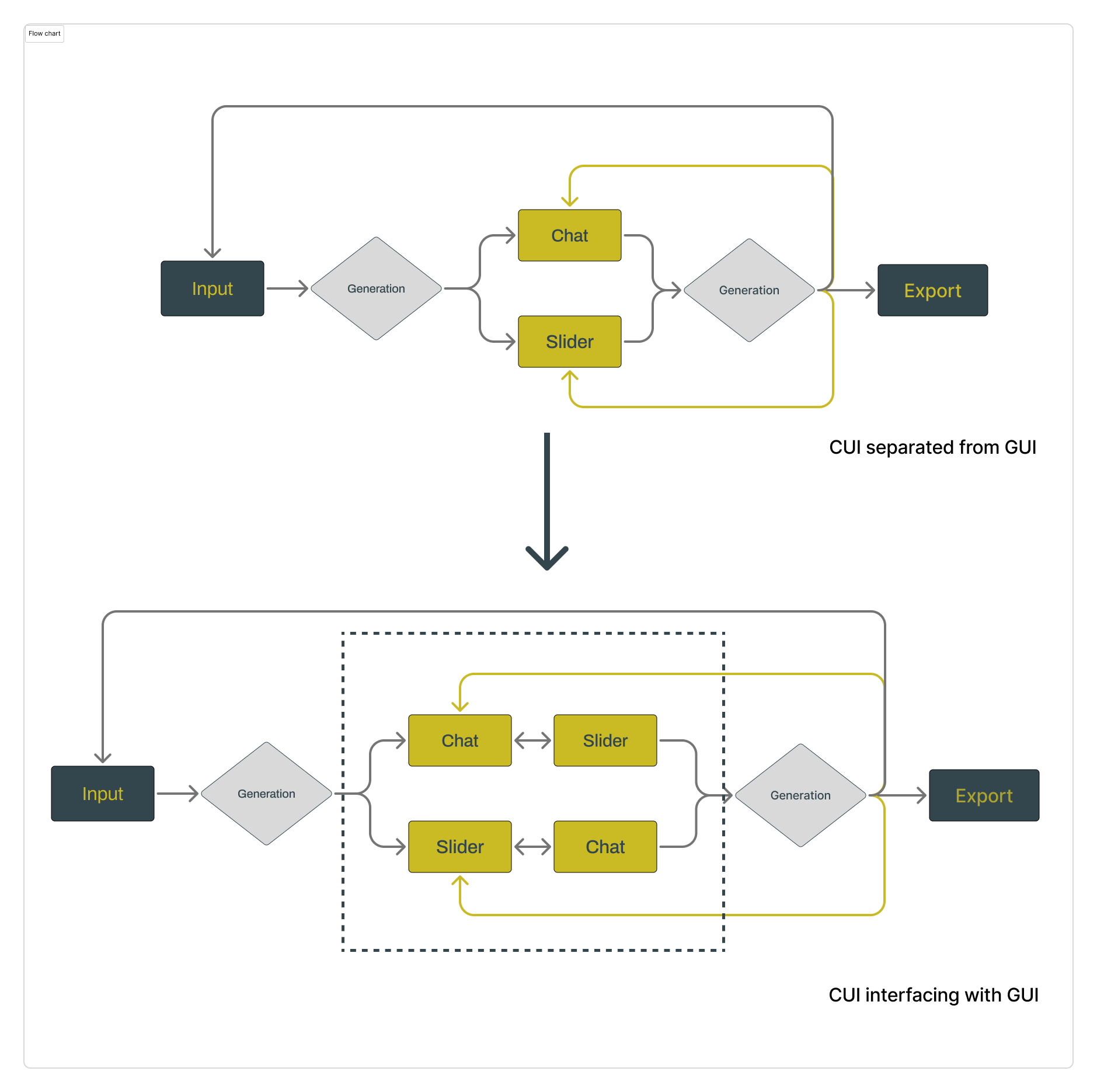

1. Balancing CUI and GUI

Let Real-world Workflows Shape the Interface

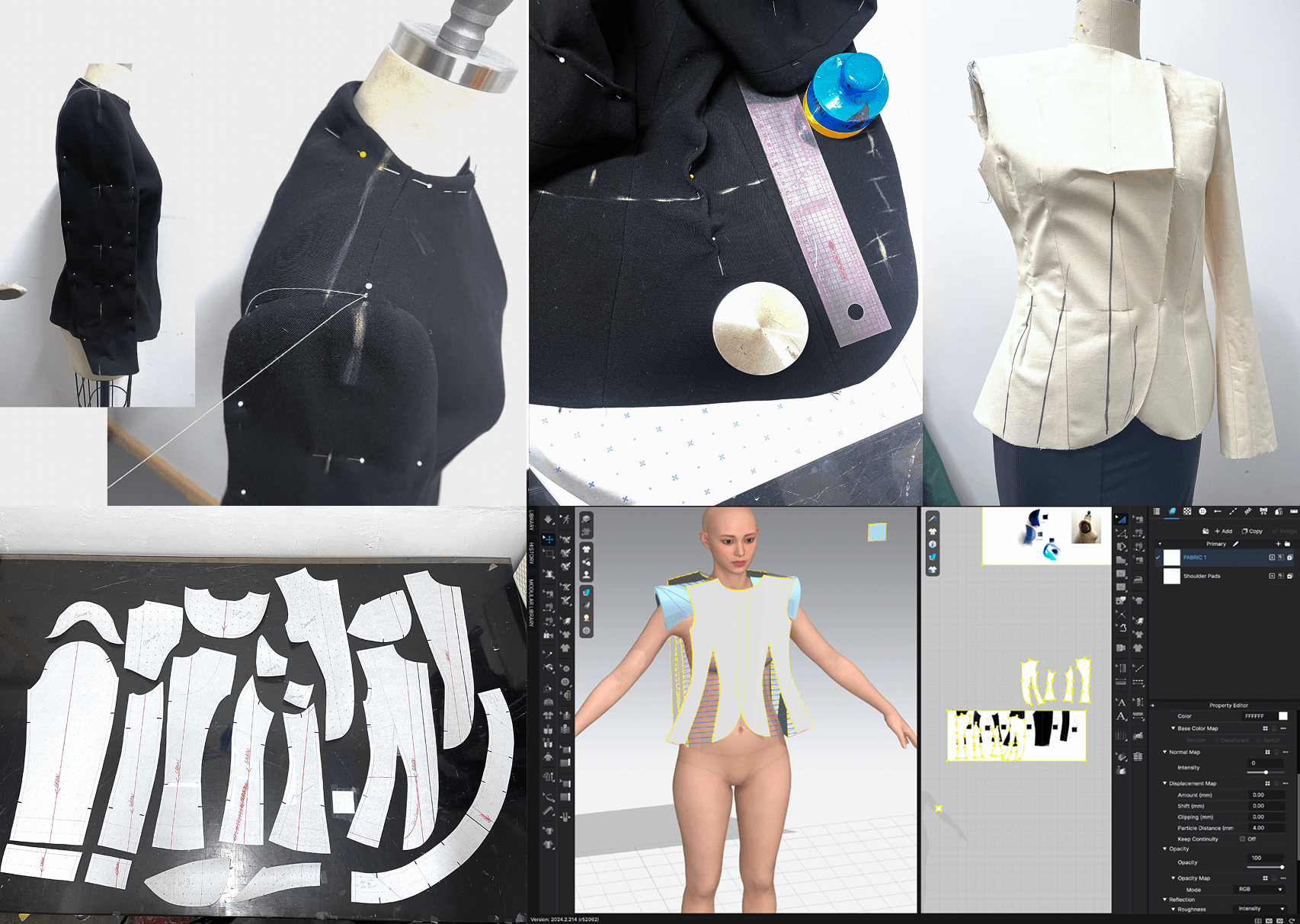

We started out by observing how a jacket is made and digitized.

We knew from the start that Bloom needed both a conversational interface (for fast ideation) and a graphical interface (for precise control). But we weren't sure how to integrate them in a way that felt intuitive.

Discovery

To find out, I conducted interviews across the fashion pipeline:

- An in-house designer at Burberry shared that their workflow is highly conversational.

- A factory technician walked me through 8 rounds of revisions for a single garment. Iteration is high-volume and deeply contextual.

- An independent designer emphasized the need to get the big picture down before bringing in a $200/hr pattern maker. Speed and rough outlines are more valuable early on than perfection.

A factory technician walking me through how she communicates revisions.

These conversations had a common thread: users start with intent, then shift into precision. This insight directly shaped our decision to build a conversational-to-control flow.

We also uncovered hidden setup flows that professionals rarely mention explicitly, such as customizing avatar size and body measurements and working from pattern libraries.

Action

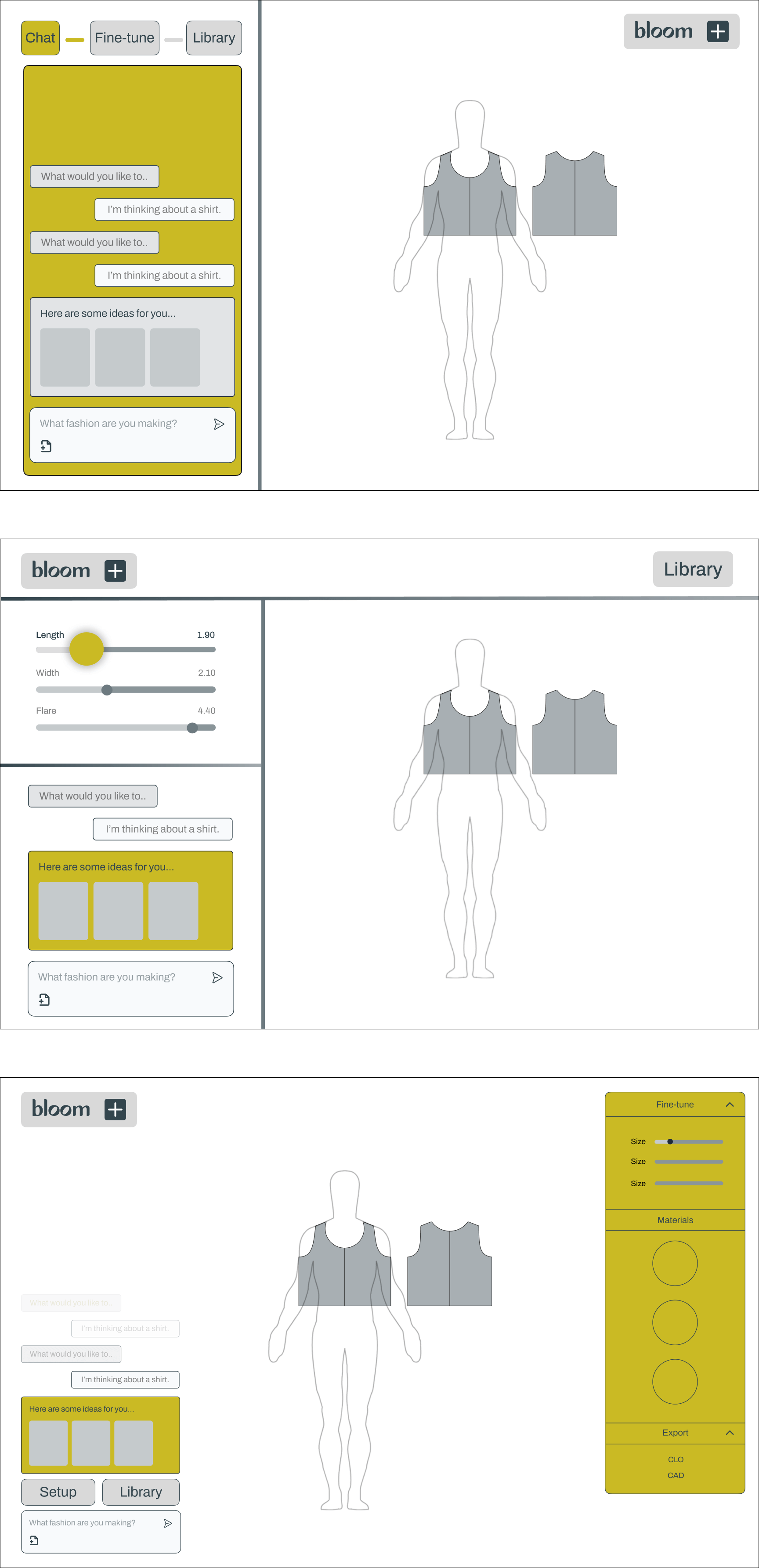

We tested multiple layout configurations, including: a single combined panel (which became visually dense), modal input flows (which broke interaction flow), and a final split-screen model: CUI for intent-setting, GUI for adjustments, colors, and materials.

We also embedded common setup actions (e.g. avatar presets, measurement tools) upfront in the flow. Below are three configurations we iterated on:

We brainstormed several layout configurations.

As we conducted more interviews, we started refining our interaction loops to better align with how designers think.

Result

The final layout accommodated the dialogical relationship between CUI and GUI. It also mirrored how designers think: ideation and refinement happen in parallel but engage different modes, with natural language for intent and precision tools for control.

2. Scaffold

Designing an Input Flow that Compensates for AI Limitations

Designers who desperately try to understand how big the AI is by swallowing it.

At Bloom, users can start a design with either an image or a text prompt. But our AI model couldn't yet detect key garment attributes from an image (e.g., upper body vs lower body, menswear vs womenswear, cut types). That meant users needed to specify these features manually before entering the main design interface.

Task

The pre-generation flow needed just the right amount of structure. Too much friction, and users drop off; too little, and the AI outputs fall apart. Our goal was to design a lightweight, flexible input scaffold that could adapt as the model matured.

Action

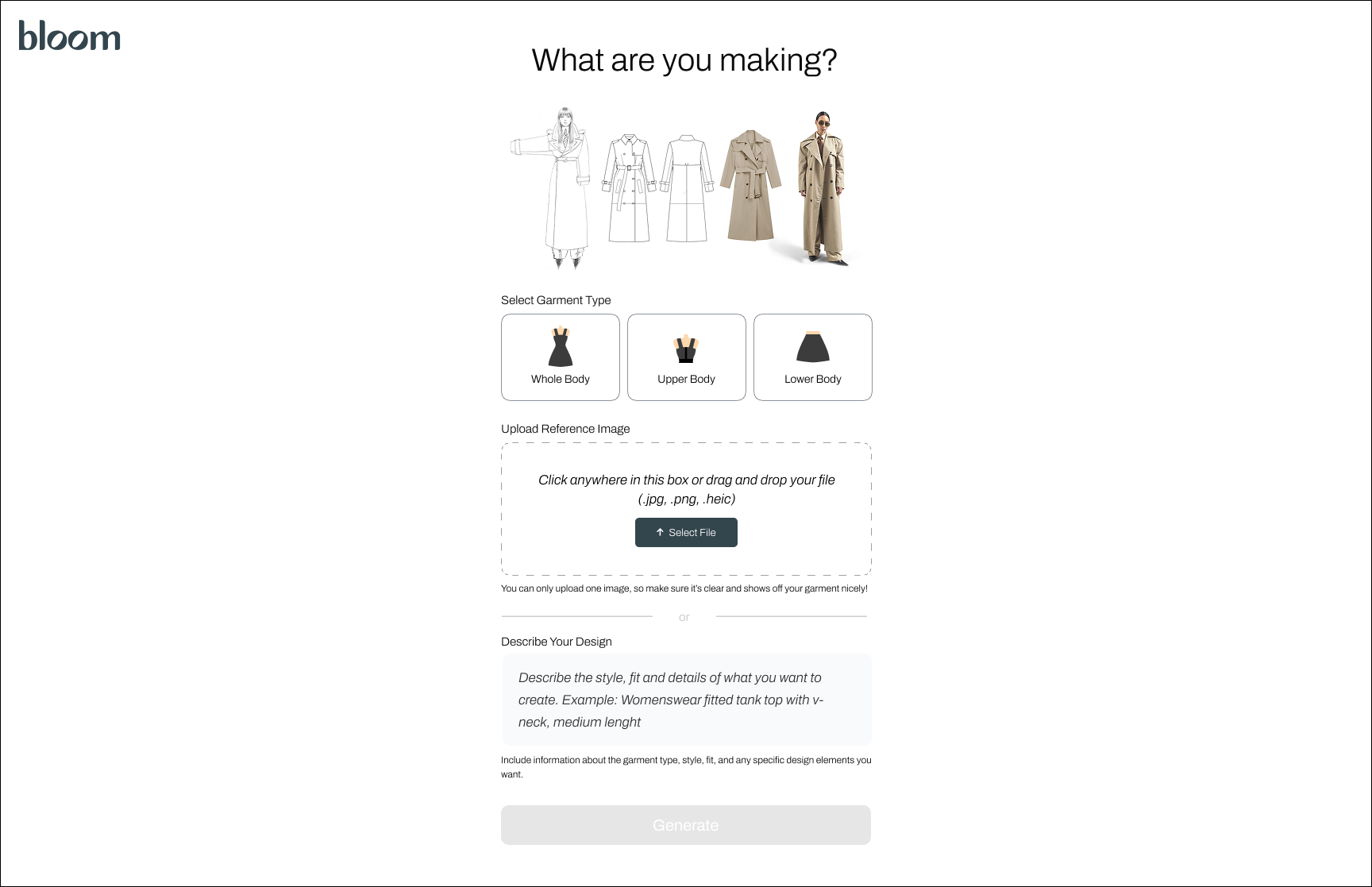

We began with a traditional static layout: a single page crammed with all input fields at once. It overwhelmed users and looked like a form.

The initial pre-generation screen.

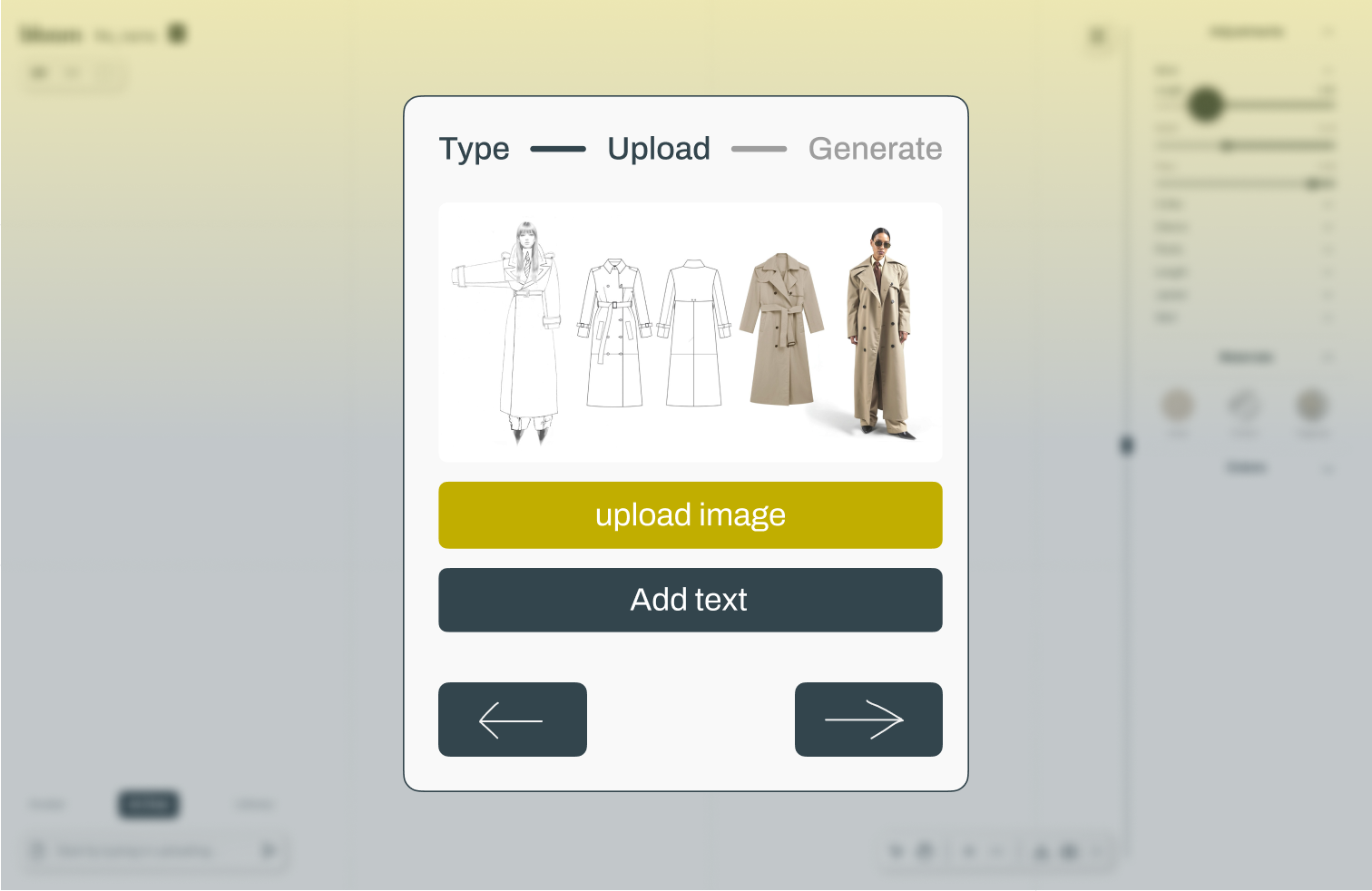

Through testing and iteration, we landed on two more dynamic alternatives: a pop-up multi-step flow, and a chat-based entry flow that scaffolded AI inputs through natural dialogue.

A pop-up modal input flow that incentivizes completion.

A dynamic chat pre-generation flow that echoes the conversational nature of fashion design while naturally setting up parameters for the generation.

Result

The final input flow reduced user drop-off, gave the AI better structured context, and created a sense of creative momentum early on. Instead of feeling like a form, it felt like the start of a co-creation process.

Takeaway

Designing with AI often means designing around AI. Guardrails aren't limits—they're opportunities to scaffold creativity and build trust in the system.

3. UX Research at an AI Startup

What happens when the product leads and you have to find the problem?

Bloom began with a clear technical capability: generate production-ready garment patterns from prompts. But we didn't yet know who this tool was really for—or what problem it solved best.

Part of our research targeted the LLM itself. Indexing the generated results helped us get to know "the elephant" better.

Task

Initial interviews asked users what they wanted. The answers were vague, and often contradicted their actual workflows. We realized that in AI-first products, users often can't articulate what they're missing, because the capability itself is new. I needed to shift from feature testing to problem discovery.

Action

I shifted to a Jobs-to-be-Done framework, focusing on:

- What users were trying to accomplish

- What was slowing them down

- What workarounds they used to fill the gaps

- What cost them the most money

Instead of asking "How would you like to use this?", I asked "Walk me through the last time you had to create a pattern fast."

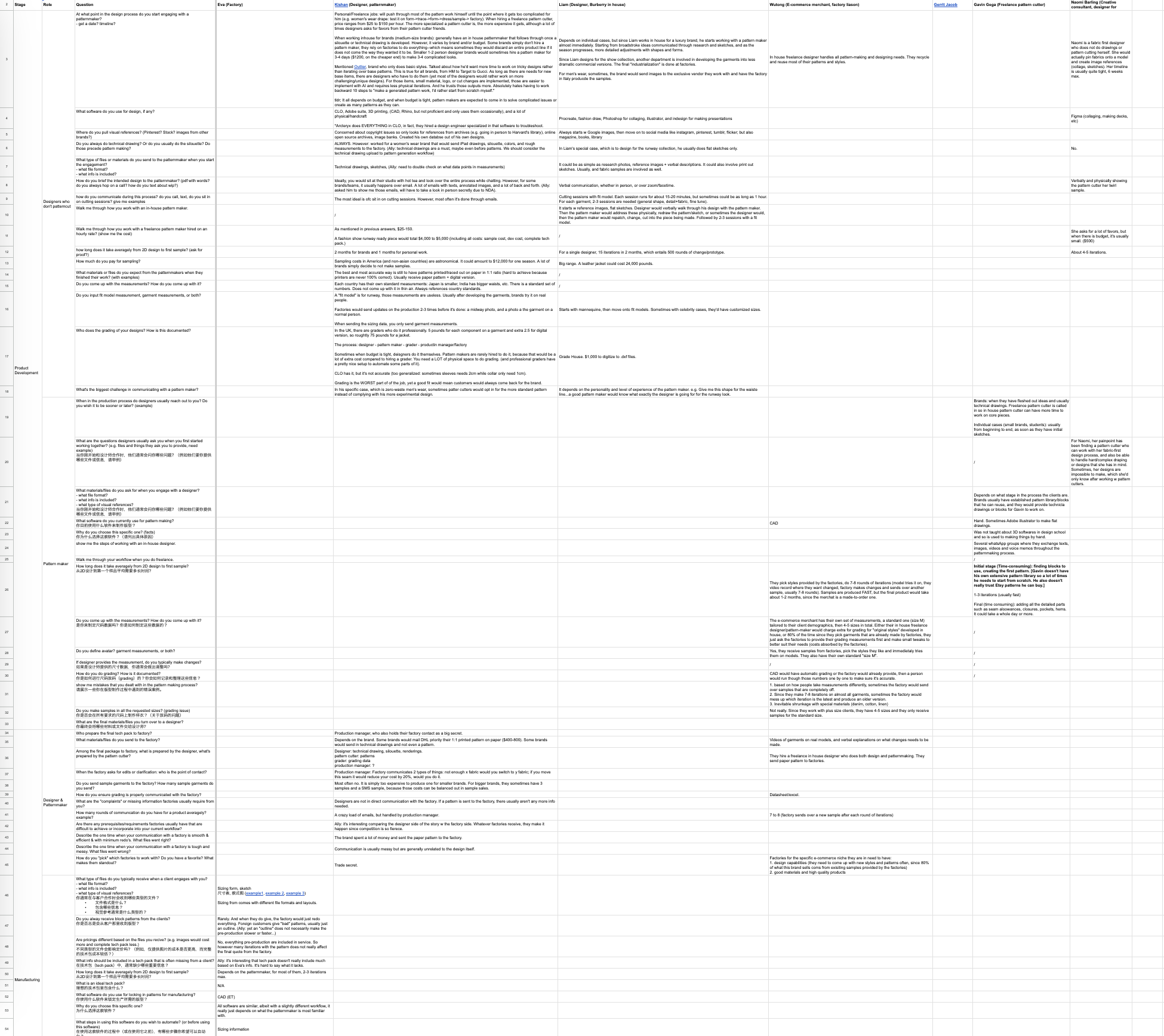

Partial screenshot of transcripts from interviews we conducted.

I also led a segmentation process to identify our Ideal Customer Profile (ICP):

- Freelance pattern makers in small-to-mid-sized studios

- Designers managing their own sampling without access to full tech packs

- Solo practitioners and early-stage brands needing fast iteration with limited budget for technical specialists

Result

This reframed Bloom as a time-saving assistant for early-stage production—not just an AI generator. It clarified our users, sharpened our priorities, and aligned features with real pain points.

Takeaway

For many AI startups, research isn't about validating features—it's about reverse-engineering the problem the product might already be solving.

Future Considerations

Looking ahead, there's potential to extend the tool's utility with features that better support iterative and collaborative workflows, including:

- Version control for tracking design iterations and reverting changes

- A smart style system that applies consistent design and pattern logic across garments within the same collection

- Real-time collaboration to support teams working across roles (e.g., designers + pattern makers)

These additions would move Bloom closer to being a full creative workspace—not just a generation tool.